NeuroAI for AI Safety

As AI systems become increasingly powerful, the need for safe AI has become more pressing. Humans are an attractive model for AI safety: as the only known agents capable of general intelligence, they perform robustly even under conditions that deviate significantly from prior experiences, explore the world safely, understand pragmatics, and can cooperate to meet their intrinsic goals. Intelligence, when coupled with cooperation and safety mechanisms, can drive sustained progress and well-being. These properties are a function of the architecture of the brain and the learning algorithms it implements. Neuroscience may thus hold important keys to technical AI safety that are currently underexplored and underutilized. In this roadmap, we highlight and critically evaluate several paths toward AI safety inspired by neuroscience: emulating the brain’s representations, information processing, and architecture; building robust sensory and motor systems from imitating brain data and bodies; fine-tuning AI systems on brain data; advancing interpretability using neuroscience methods; and scaling up cognitively-inspired architectures. We make several concrete recommendations for how neuroscience can positively impact AI safety.

Use brain data to finetune AI

Core idea

Collecting human neural data has become cheaper, safer, and more feasible at scale. Deep brain stimulation has become routine for the treatment of Parkinson’s disease and psychiatric disorders [375, 376], while intracortical brain-computer interfaces from multiple companies are undergoing clinical trials [377, 378, 379]. In clinical research, labs are collecting single-neuron recordings from epileptic patients with the help of next-generation Neuropixel probes [277, 278, 380]. New semi-invasive and non-invasive approaches like functional ultrasound also open the door for portable, continuous neural data collection from humans. Non-invasive approaches like fMRI, fNIRS, and EEG, historically dismissed because of noise issues, are now being reappraised with deep learning techniques, with impressive results.

Currently, machine learning relies on large datasets of human-derived outputs or behavior, such as choices/preferences via reinforcement learning from human feedback [383], statistics of natural language, and behavioral cloning for robotics. This typically provides a signal (consistent interactions with a user, a large corpus of written text, 3D tracking of body movements) that can be augmented with synthetic data. While these approaches have been remarkably successful, behavioral signals do not always reflect the process by which representations, decisions and movements have been created; it is possible to clone the behavior of a human by a process of imitation using shortcut learning in such a way that the system will fail catastrophically out-of-distribution [41]. Here, we evaluate the idea that rich process supervision signals derived from neural data or from complex behavioral data motivated by cognitive science could help better align AI systems with the human mind.

Why does it matter for AI safety and why is neuroscience relevant?

Humans are capable of organizing their behavior safely, including the ability to collaborate in groups towards shared goals, create shared laws and institutions, and agree on group status and trustworthiness. Recent work has focused on aligning these models to humans at the behavioral level, including through reinforcement learning from human feedback [383].

Human neural data–and more generally, complex behavioral data motivated by cognitive science–has seldom been used for labeling large-scale datasets. Latents measured from the brain could provide richer sources of information about decision-making processes than what is visible through behavior, and demonstrate higher validity when evaluated out-of-distribution [384]. Latents measured from the brain could provide an especially rich supervision signal in cases where the supervision signal would be otherwise hard to obtain:

Tasks where explicit labeling is difficult. This includes moral judgment, complex emotions, and long-term decision-making.

Tasks where it’s hard for humans to explain or demonstrate how they’ve accomplished a task. This includes automatic responses e.g. avoiding accidents in driving, intuition about social interactions, emotional understanding, non-verbal cues, and metacognition [385].

Relatedly, tasks where the target is the process by which a decision is made. This includes complex reasoning and multi-step planning [386, 387].

Cognitive processes where the key measurement of interest is the latent state itself, and its match or mismatch with behavior: e.g. motivation, intent, malice, duplicitousness, sycophancy, judgments about trust, morality, ethics, etc. [388, 389, 390, 391, 392].

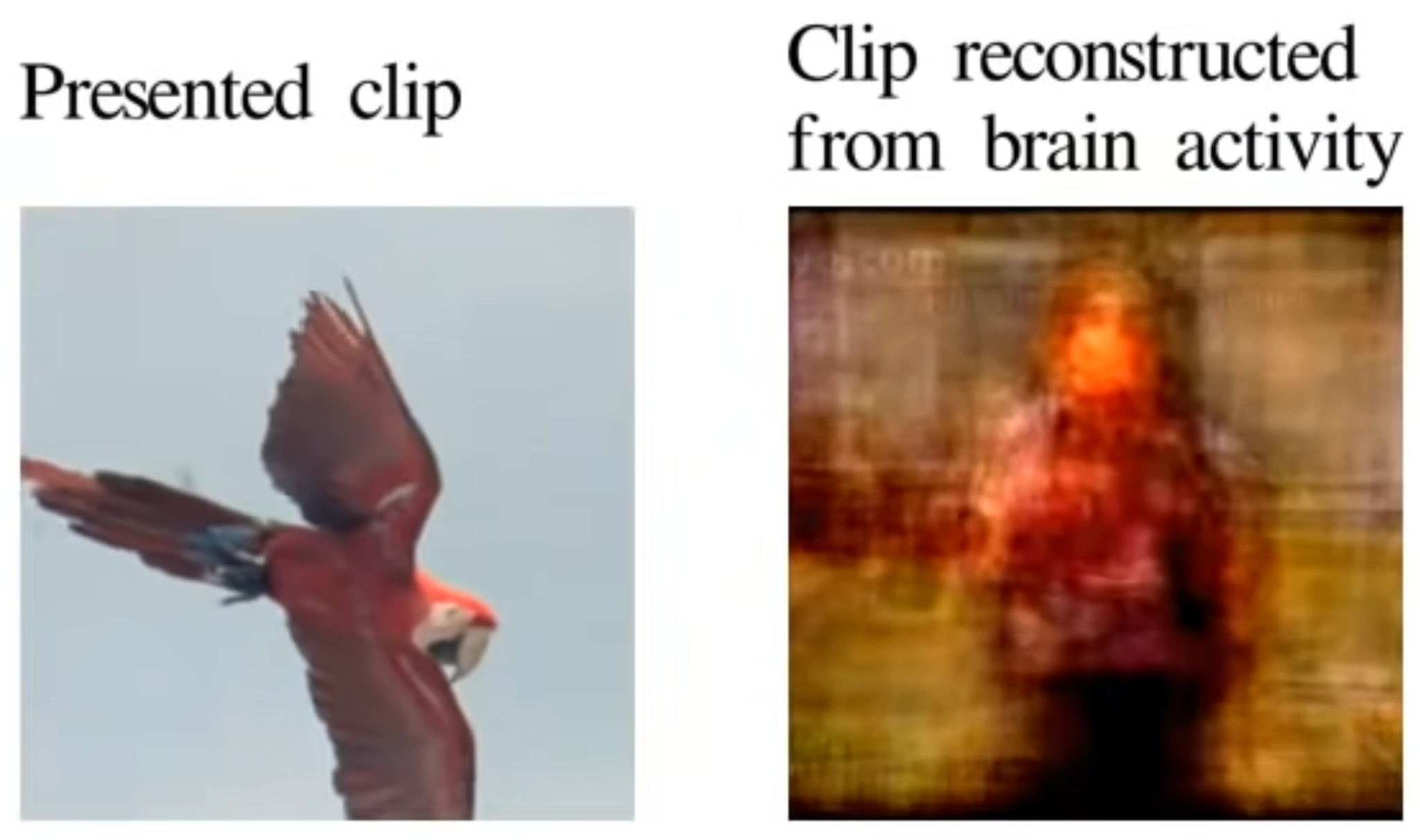

Finetuning with brain data can be thought of as a form of process supervision. Process supervision [386, 387] is a form of supervision where models are not only incentivized to obtain the correct behavioral outputs, but also to mimic the desired steps leading to the behavioral output–in other words, not only focusing on the what, but also on the how. Process supervision has proven useful in improving mathematical and general reasoning capabilities in large language models. It is also likely behind some of the reported improvements from reasoning in recent models, such as OpenAI’s o1 model [393]. Although process supervision can be partially automated by leveraging highly curated datasets and content generation [394], it is generally tedious and expensive to collect high-quality process supervision datasets. Brain data–and more generally, rich behavioral data motivated by cognitive science–reflects the dynamic process by which behavior is produced and could prove an alternative, rich reservoir of process supervision [395]. Current small-scale experiments suggest that such approaches could be more robust to domain shifts [396, 397, 398]. More generally, brain-informed process supervision1 (BIPS) has the potential to instill deeper levels of alignment between humans and machines than what is currently feasible. Furthermore, there is extensive literature on representational alignment which can help guide the development of neural alignment methods [79]. In light of these advances, we evaluate the feasibility of aligning human and AI representations in the general case.

Details

Cognitively-inspired process supervision

Before we cover brain-data-based finetuning methods, we briefly cover the related field of cognitively-inspired process supervision. Most data leveraged for machine learning is ultimately a product of human cognitive labor. Whether it’s text on the internet or image labels, ultimately, humans performed cognitive work to produce these artifacts. It’s thus worth reflecting on why exactly the final products would be misaligned with human cognitive processes.

Consider, for example, the process by which the ImageNet dataset was collected [399]. ImageNet is based on the WordNet taxonomy [400], a large lexical database of English that was manually compiled in the 1980s and 1990s from a broad range of sources. Dictionaries and thesauri were pored over by linguists and lexicographers over a decade to form a set of hierarchically organized synonym sets (synsets). The concrete nouns in these synsets were used as keywords to image search engines such as Flickr to assemble hundreds to thousands of images from each of thousands of categories. These images were deduplicated, filtered, and annotated by people on the Amazon Mechanical Turk (AMT) platform. ImageNet-1k (ILSVRC 2012) was a subset of 1.2M images from 1000 categories, chosen to be semantically distinct and visually concrete. The 1000 categories include 90 different dog breeds. Thus, while the images are a reflection of cognitive labor, and are vastly more representative of human visual experience than the datasets that preceded it, they are far from representative samples of the visual diet of any individual. Thus, it would be quite surprising if the natural categories learned from such a dataset or the internal representations derived from them were representative of human natural categories or representations [401, 402, 403].

To finetune AI systems to better reflect human natural categories or their internal representations, researchers have turned to collecting rich behavioral data inspired by cognitive science, a set of approaches that we collectively refer to as cognitively-inspired process supervision. One key technique in this domain is the use of triplet losses and similarity judgments [396, 397, 398, 404]. Human judgments about the relative similarity of stimuli are collected to fine-tune the representations of models trained conventionally. By incorporating these pairwise or triplet comparisons, models can learn to organize information in ways that more closely reflect human conceptual spaces [405, 406]. A representative approach [398] uses human triplet similarity judgments to create a teacher model, which then generates a large dataset of human-like similarity judgments on ImageNet images. This dataset is used to fine-tune vision foundation models, resulting in representations that better reflect human conceptual hierarchies. The finetuned models show improved performance on human similarity judgment tasks, better generalization in few-shot learning scenarios, increased robustness to distribution shifts, and better performance on out-of-distribution datasets.

A related approach involves the use of soft labels [396] which incorporate uncertainty [407]. Rather than using hard, binary classifications, soft labels allow for more nuanced representations of category membership and uncertainty. This better reflects the graded nature of human categorization and can lead to more robust and generalizable models. Relatedly, error consistency and misclassification agreement [408] can be used to guide models. Triplet losses, soft labels, confidence, and error-geometry-aware methods can help finetune generic representations obtained from self-supervised or supervised learning to better approximate the hierarchical structure of humans’ natural categories.

Another common form of process supervision investigated in vision models is replicating attention and eye gaze patterns to guide models [409, 410, 411, 412]. Human vision has much higher acuity in the fovea than in the visual periphery, and eye gaze patterns are indicative of which features and objects are used to make visual judgments. By training models to replicate human patterns of visual attention and gaze, these approaches aim to create AI systems that focus on robust features in a human-like way. For example, Lindsey et al. (2023) [413] fine-tune pre-trained vision models with the ClickMe dataset, where humans are asked to identify important image parts by clicking on them. Activations in convolutional layers–a proxy for attention [414]–are trained to match ClickMe annotations. The resulting models rely less on high-frequency, brittle features for classification, are better matched to single neuron representations in the inferotemporal cortex, and are more resistant to L2-norm adversarial attacks. Eye-tracking has also been extended to provide a supervision signal to natural language processing models [415], and recently to large language models [416].

Although rarely identified as such, cognitively-inspired fine-grained supervision methods are conceptually related to teacher-student distillation [417], and to process supervision [386, 387]. While collecting process supervision data can be tedious, and ripe for biases due to failures in metacognition [385], it is generally appreciated that evaluating labels is easier for humans than producing them in the first place. There is a large, and currently underexplored design space in augmenting models with automatically generated process supervision traces curated and relabeled by humans. This could potentially include enhancing models with annotated moral reasoning traces; providing feedback on visual question-answering reasoning formed by vision-language models (VLMs); providing metacognitive feedback to foundation models; and creating cooperative decision systems.

Process supervision through neural data augmentation

Neural data reflects not just the outcome of a decision but the process through which that decision was made. Could we train or finetune AI models directly on neural data as a form of process supervision [384]? Several different schemes are possible, including:

Fine-tune a sensory model to match the latent representations of neural activity. Fine-tune a vision or language model to match the representations during image viewing or podcast listening, for example using representational similarity analysis [79, 115, 418, 419], or through linear readouts [51].

Fine-tune a reasoning model so that it matches the thought process of a human on difficult tasks. Similar to how LLMs with process supervision learn to generate hidden language tokens as scratch pads [387], fine-tune LLMs to generate hidden brain tokens, then condition text generation on those hidden brain tokens.

Fine-tune a heuristic search model (e.g. Monte Carlo tree search to play Go [2] or to generate programs [420]) so that it tends to visit nodes with a frequency congruent in neural activity.

We covered fine-tuning vision models with brain data in Section 2.3.4 on neural data augmentation. Here we focus on applications to language, which offers a more direct link to semantics, reasoning and moral decision making, all relevant to AI safety. In a very early study, Alona Fyshe and colleagues [421] reported that fMRI or MEG could be used to find word representations that were better matched to behavior than conventional embeddings. However, consistent with the time period, this was based on a very small dataset (60 words) with linear word embedding methods.

It has become increasingly feasible to read language information from brain data [122, 123, 422, 423, 424, 425], opening new opportunities for brain-based fine-tuning. Moussa et al. (2004) [426] recently showed that fine-tuning speech models using fMRI recordings of people listening to natural stories ("brain-tuning") led to improved alignment with semantic brain regions and better performance on downstream language tasks. Their approach, validated across three different model families (Wav2vec2.0, HuBERT, and Whisper), not only increased alignment with brain activity in language-related areas, but also reduced the models reliance on low-level speech features. Importantly, the brain-tuned models showed consistent improvements on tasks requiring semantic understanding, such as speech recognition and sentence-type prediction, while maintaining or improving performance on phonetic tasks.

While these results are highly promising, we have yet to see a clear demonstration of brain data used to finetune models in a way directly relevant to AI safety. [427] reported null results on fine-tuning large language models on fMRI data collected in the context of moral decision-making. This may simply be due to the paucity of data that was used for fine-tuning [428]. There is a largely unexplored design space around fine-tuning AI models with large-scale brain data.

Evaluation

Fine-tuning vision and vision-language models with cognitively-inspired behavioral data and neural data has been demonstrated to improve out-of-distribution robustness, one-shot learning, and alignment to human natural categories. Noninvasive neural and cognitive data augmentation is a natural complement to building digital twins of vision systems (Section 2); while digital twins can capture the micro-geometry of image recognition in non-human animals, neural and cognitive data augmentation can capture the macro-geometry of visual representations with a higher level of fidelity than has been possible through conventional labeling pipelines. Thus, there exists a promising synergy between building digital twins of sensory systems based on invasive data and fine-tuning through neural data augmentation. This would allow the creation of models that reflect the human visual system at all relevant levels, which we hope will be investigated more thoroughly in the near future.

By contrast, there has been little work in finetuning language and auditory models with brain data, although this is starting to change [426]. Improving upon existing large-scale models trained on internet-scale datasets will likely require lengthy, high quality recordings of neural activity. Because of noise, lengthy recordings are necessary to accurately estimate a mapping between neural activity and an artificial neural network [424, 429]. This suggests recording from a small number of subjects for an unusually long period (at least tens of hours, ideally hundreds) in ideal conditions, e.g. with a headcase in fMRI with optimal coil positions and a highly optimized scanning sequence. This design, sometimes called intensive neuroimaging, is being investigated by a growing number of laboratories [174, 430]. For example, the Courtois Neuromod project recorded people watching 6 seasons–or roughly 50 hours–of the TV show Friends. Preliminary results indicate that fine-tuning auditory models on this data could lead to gains in downstream tasks [431], consistent with [426].

Before engaging in such large-scale neural data collection, however, one would need to establish a niche where neural data collection provides added value over behavioral data collection. We foresee two scenarios where this could be the case. As we alluded in the introduction, in one scenario, the information of interest cannot be elicited through behavior [432].

A second scenario is when neural data collection could win over behavioral data on a bits-per-dollar basis. That means matching behavioral data on both an information density basis and on marginal cost. This is a high bar to hit, as behavior is easy to collect at scale through crowdsourcing platforms, and it can be less noisy than neural data. Speech is estimated to transmit information at an average rate of 39 bits/s across a wide range of languages [433]. [434] estimate that a wide range of overt behaviors are capped at 10 bits/s. Do any brain data modalities approach that benchmark? We performed a broad survey of noninvasive and invasive neural data modalities in humans in the context of brain-computer interfaces (Table 4).

| BCI type | Reference | Task | Reported avg ITR (bps) |

|---|---|---|---|

| Intracortical | [435] | Handwriting decoding | 6.56 |

| Electrophysiology | [436] | Speech decoding | 13.33 |

| [122] | Speech decoding | 8.69 | |

| [437] | Cursor control (grid task) | 8.00 | |

| fMRI | [438] | Visual retrieval | 3.24 |

| [439] | Text decoding | 6.95 | |

| EEG | [440] | Free spelling | 1.31 |

| SSVEP-based EEG | [441] | Free spelling | 16.86 |

| SSVEP-based MEG+EEG | [442] | Visual decoding | 5.20 |

| SSVEP-based MEG | [442] | Visual decoding | 4.53 |

| OPM-MEG | [443] | Spelling | 1.31 |

| HD-DOT | [444] | Visual information decoding | 0.55 |

| fNIRS | [445] | Ternary classification | 0.078 |

| fUS | [446] | Movement intention decoding | 0.087 |

Keeping in mind the difficulty of comparing very different modalities, tasks, and experimental paradigms on an apples-to-apples basis, we find that the 10 bits/s benchmark is within the reach of intracortical recordings, and that fMRI is within a factor two of that. MEG and EEG state-of-the-art results are dominated by steady-state visual evoked potential (SSVEP) paradigms, which are not as directly relevant to finetuning AI systems with neural data. We note that while functional ultrasound decoding results reported are quite modest, they are based on a very small imaging volume; fields of view thousands of times larger are attainable in theory, with consequent increases in information transmission rate, which could put it above the threshold. Finally, while not included in this table because it has not been used thus far in humans, a recent preprint on a flexible thin-film micro-electrocorticography array demonstrated decoding visual stimuli at a rate of 45 bits/s [447].

Thus, methods that could conceivably hit the 10 bits/s benchmark include electrophysiological recordings, ECoG, fMRI, and functional ultrasound. fMRI has high marginal costs due to the need for dedicated rooms, technicians, and refrigerants for magnets. This leaves intracortical recordings, ECoG, and functional ultrasound as potential candidates for scaling up neural data augmentation. Conventional fMRI, which is broadly available in academic settings, can continue to serve as proof-of-concept data acquisition method for neural data augmentation schemes.

Beyond vision and language, neural data could be used for fine-tuning robotics, RL, and agentic models. A significant challenge is collecting sufficiently rich data over the long time scales necessary to work around the high noise in neural recordings. There is a long history of using games to study rich cognition [448], including board games like Othello and chess, bespoke games like Sea Hero Quest [449], or popular games like Bleeding Edge [450], Little Alchemy, and Overcooked. There has been some work in comparing the representations of neural networks trained with reinforcement learning with those obtained during games, e.g. Atari games [451], 16-bit era 2D games [452], or driving simulators [453]. However, to the best of our knowledge, there have been no attempts to finetune machine learning models on these datasets.

Collecting neural data during gameplay could provide a scalable way to obtain the large-scale paired datasets necessary to elucidate how higher cognitive processes operate in the brain, and eventually fine-tune machine learning models to match these cognitive processes. To maximize the impact on both neuroscience and AI safety, we foresee the use of open-ended, complex games that have a high loading on cognitive phenomena involving ethical behavior, cooperation, and theory of mind, and that have served as challenges for AI. This could include Minecraft [454], Diplomacy [324], Hanabi [455], poker [456] or text adventures like the Machiavelli benchmark [457].

Opportunities

Demonstrate the feasibility of fine-tuning large language models on extant fMRI data

Collect rich process supervision data–including neural data–for cognitive phenomena of interest to AI safety, including those that extend beyond vision and language: moral and social decision-making, decision-making under uncertainty, cooperation, and theory of mind

Use AI safety-relevant, ecologically valid, and intrinsically motivating games

During neural data recording, focus on long recording times (10 hours+) per subject to mitigate high noise

Reduce the barrier to entry of AI safety researchers wanting to work with neural data by the development of findable, accessible, interoperable, and reusable (FAIR) preprocessed datasets in machine-learning-friendly formats

Encourage the development of non-invasive or minimally invasive neurotechnology that can go beyond the 10 bits/s barrier, including functional ultrasound [446] and micro-ECoG [447]

We considered the alternative evocative term RLBF, reinforcement learning from brain feedback, but many of the methods we present use plain supervised learning rather than reinforcement learning.↩︎