NeuroAI for AI Safety

As AI systems become increasingly powerful, the need for safe AI has become more pressing. Humans are an attractive model for AI safety: as the only known agents capable of general intelligence, they perform robustly even under conditions that deviate significantly from prior experiences, explore the world safely, understand pragmatics, and can cooperate to meet their intrinsic goals. Intelligence, when coupled with cooperation and safety mechanisms, can drive sustained progress and well-being. These properties are a function of the architecture of the brain and the learning algorithms it implements. Neuroscience may thus hold important keys to technical AI safety that are currently underexplored and underutilized. In this roadmap, we highlight and critically evaluate several paths toward AI safety inspired by neuroscience: emulating the brain’s representations, information processing, and architecture; building robust sensory and motor systems from imitating brain data and bodies; fine-tuning AI systems on brain data; advancing interpretability using neuroscience methods; and scaling up cognitively-inspired architectures. We make several concrete recommendations for how neuroscience can positively impact AI safety.

Build biophysically detailed models

- Core idea

- Why does it matter for AI safety and why is neuroscience relevant?

- Details

- Generating whole-brain connectome datasets with electron microscopy

- Emerging scalable approaches for generating whole-brain connectome datasets

- Measuring the electrical and biochemical properties of single neurons

- Biophysical modeling of single neurons and populations of neurons

- Evaluation

- Opportunities

Core idea

Connectomics generates the structural circuit map of an organism’s nervous system, detailing neurons and their synaptic connections. Biophysical modeling aims to model the electrical and biochemical dynamics of individual neurons. Biophysically detailed models combine these two approaches to create a high-resolution, neuron-level simulation that accurately replicates local neural interactions and large-scale brain activity. It has long been argued that human biophysically detailed models would be a safe path toward artificial general intelligence [54], as they replicate the human brain’s structure and function in a digital medium. Because such a model is built after the human brain, it may be more likely to possess human-like values, emotions, and decision-making processes. This inherent alignment could reduce the risk of developing AI systems whose goals diverge from human interests. However, while connectomics may represent the most direct bottom-up path to building intelligence, it may not be the most efficient approach, just as achieving powered flight did not require replicating birds’ feathers.

Why does it matter for AI safety and why is neuroscience relevant?

Biophysically detailed models are premised on the idea that the brain’s mechanisms and functionality can be faithfully reproduced in silico without the need for actual biological matter: the specific wiring of neurons and the signaling mechanisms crucial for emergent phenomena such as intelligence, values, and perception can be recapitulated if reconstructed from the bottom up. Recurrent connections, diverse cell types, and precise synaptic properties are critical in neural function and information processing [230, 231]. However, this raises fundamental questions about the appropriate level of abstraction: while a perfect copy would require modeling down to atoms and molecules, higher-level approximations capturing informational or biophysical dynamics could preserve core functionality. This abstraction choice has profound practical implications: simulating detailed biochemical processes that occur naturally and efficiently in biological neural tissue may require enormous computational resources when implemented on traditional computing hardware, potentially demanding infrastructure-scale energy requirements that could make whole-brain modeling impractical at increasing levels of biological detail. Biophysically detailed models take an implementation-maximalist view of AI safety: if we can simply model a whole human brain at the biophysical level, we could have a more human-like AGI. Moreover, if these models were to fail, they may fail in more human-like ways. Steps along this path include emulating whole mouse brains and certain subsystems or processes. We could apply the same intuitions when reasoning about biophysically detailed models as we do when reasoning about humans; we could apply existing moral frameworks to modeled minds; and we could collaborate naturally with these models.

The difficulties associated with these models are both technical and conceptual. Key technical barriers include long timelines to map an entire human connectome, difficulty in scaling tools to probe single neuron function, and the amount of compute required to simulate billions of interconnected neurons. Conceptually, questions remain about what neural properties must be directly measured versus inferred, what can be learned from measurements, and what additional constraints are needed for accurate simulation. These challenges have generally made this approach less appealing in the short term (<7 years). 16 years after the original biophysically detailed model roadmap [54, 232], and in light of very significant advances in using the fly connectome to understand how the fly’s brain controls its behavior [152, 233] and new optical microscopy approaches that could solve some of these challenges, it may be time to revisit this approach.

Details

Cellular-resolution biophysically detailed models rest on three major foundations: extracting cellular-level structure at the whole-brain scale, capturing a representation of the electrophysiological and biochemical processes in neurons and synaptic connections, and simulating these at scale. Achieving the last of these appears to be within reach, as simulations of networks of neurons at the scale of the human brain (~86 billion neurons, ~100 trillion synapses) have recently been demonstrated [234, 235], at least with point-neuron approaches. Therefore, data availability (the circuit architecture in terms of cells and their connections, and the associated physiology) is the main bottleneck for these models.

Below we describe two approaches to structural and molecular mapping, electron microscopy (EM) and optical microscopy. Whereas the circuit architecture of the brain will need to be characterized via connectomic approaches, measuring physiology directly from every single cell and synapse in the same brain appears impractical to impossible [236]. Instead, one will likely need to combine more targeted physiological and multi-omic measurements of cellular and synaptic properties from diverse cell types and in many different brain areas with ML techniques, which will assign physiological properties to cells and synapses from the connectomic imaging data [237]. Together, these approaches have the potential to create highly detailed and accurate biophysical models.

Generating whole-brain connectome datasets with electron microscopy

The C. elegans connectome was first published in 1986 [239] after a decade of painstaking work. Advances in microscopy and artificial intelligence have made it significantly easier to generate large-scale neural connectivity datasets. Recently published connectomes include a 1 mm

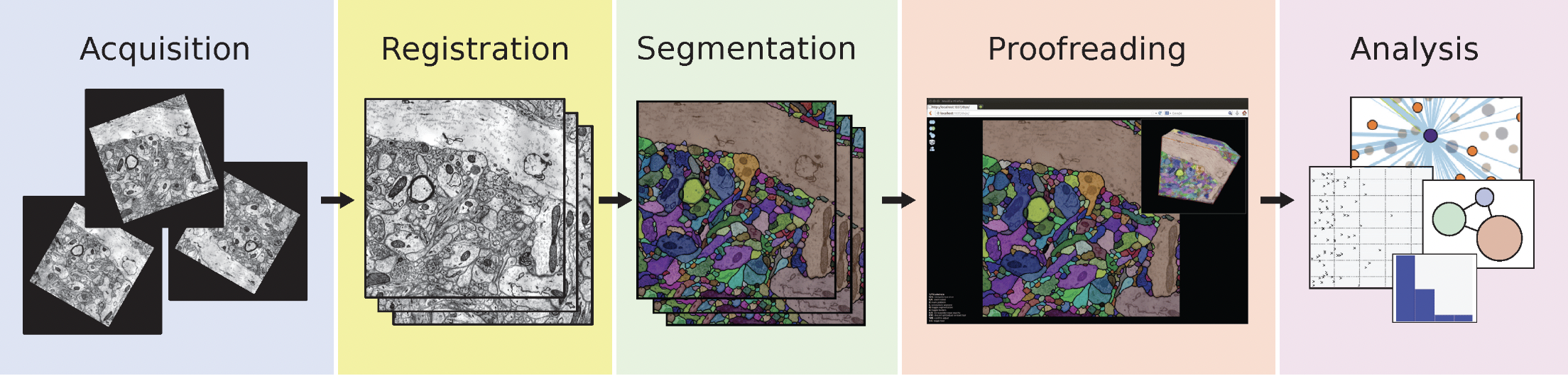

The general pipeline for whole-brain connectomics consists of five major stages (Figure 14). First is data acquisition, where high-resolution electron microscopy images are collected from brain tissue sections. These images then undergo registration to align consecutive sections into a coherent 3D volume. The third stage is segmentation, where computational methods, typically machine learning approaches, are used to identify and label distinct cellular structures. This is followed by proofreading, where human experts verify and correct the automated segmentation results. Finally, the validated reconstruction enables various analyses of neural connectivity and network properties, and ultimately serves as an input for simulation.

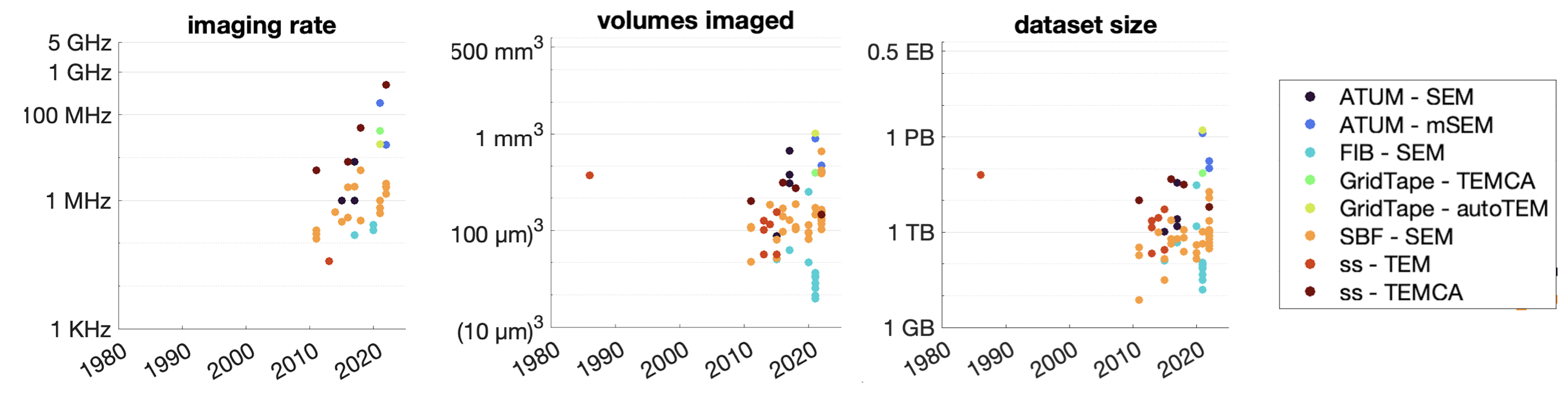

Connectomics requires imaging methods that can resolve individual synapses. To accurately capture synaptic structures, we must resolve features that are typically around 20-30 nm in size, for example, the distance between pre- and postsynaptic membranes. This is below the resolution achievable with visible and near-ultraviolet light in conventional, diffraction-limited microscopy. Instead, scans are typically captured by volume electron microscopy (vEM), in particular serial section transmission electron microscopy (ssTEM) and scanning electron microscopy (SEM) of brain slices typically less than 100 nm thick. Imaging must be fast to feasibly scale to the volume of the human brain, which is ~1300 cubic centimeters, or 1.3M cubic millimeters. The fastest reported net imaging rate with vEM is 0.3 gigapixels per second, which could theoretically image a cubic millimeter in 5 weeks at a 3 nm planar resolution with an array of four microscopes [243]. Without further enhancements in throughput, we would need ~50,000 parallel microscopes to scale up to the human brain within a decade.
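
The microscope count above follows from simple scaling, and a short sketch may make the arithmetic easier to check. The volume, imaging rate, array size, and time horizon are the figures quoted in the text; the 30 nm section thickness and the 52-week year are assumptions introduced here for illustration only (the text states only that sections are typically under 100 nm thick).

```python
# Back-of-the-envelope check of the vEM throughput figures quoted in the text.

HUMAN_BRAIN_MM3 = 1.3e6        # ~1300 cm^3, from the text
WEEKS_PER_MM3 = 5              # per cubic millimeter, with a four-microscope array [243]
MICROSCOPES_IN_ARRAY = 4
TARGET_YEARS = 10
WEEKS_PER_YEAR = 52            # assumption: round number

def microscopes_needed(volume_mm3, target_years):
    """Parallel microscopes needed to image a volume within the target time."""
    microscope_weeks = volume_mm3 * WEEKS_PER_MM3 * MICROSCOPES_IN_ARRAY
    return microscope_weeks / (target_years * WEEKS_PER_YEAR)

def weeks_per_mm3(pixel_nm=3.0, section_nm=30.0, rate_px_per_s=0.3e9, n_scopes=4):
    """Imaging time for 1 mm^3; section_nm is an assumed value, not from the text."""
    voxels = (1e6 / pixel_nm) ** 2 * (1e6 / section_nm)   # 1 mm = 1e6 nm
    seconds = voxels / rate_px_per_s
    return seconds / (7 * 24 * 3600) / n_scopes

n_scopes_human = microscopes_needed(HUMAN_BRAIN_MM3, TARGET_YEARS)
weeks_check = weeks_per_mm3()

print(f"microscopes for a human brain in a decade: {n_scopes_human:,.0f}")
print(f"weeks per mm^3 at an assumed 30 nm section thickness: {weeks_check:.1f}")
```

Under these assumptions the sketch gives about 50,000 microscopes and roughly 5 weeks per cubic millimeter, in line with the quoted figures; a different section thickness would shift the second number proportionally.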

Importantly, post-processing (registration, segmentation, and proofreading) has historically been the most time-consuming and expensive part of the process. Although machine-learning-enabled methods for automated segmentation, such as flood-filling networks [244] and local shape descriptors [245], have improved the efficiency of synaptic tracing in terms of GPU time, post-processing still requires human proofreading, at least for now. The adult fly brain connectome has benefited from tens of thousands of volunteers manually checking neuron reconstructions across slices, collectively adding up to a 33 person-year effort [147]. A recent roadmap extrapolated that whole-mouse brain connectome proofreading would require roughly 0.3-1M person-years, consuming over 97% of the entire project’s budget [232]. Straightforward extrapolation by brain volume from Figure 5 of the Wellcome Trust roadmap [232] results in an estimate of ~$1.5-4.3T for a whole macaque brain and ~$20-59T for a whole human brain with EM connectomics, the upper end of which is about two years of US GDP. Clearly, massive cost reductions will be necessary to bring a whole-brain human connectome within reach.

Emerging scalable approaches for generating whole-brain connectome datasets

Newer imaging modalities may overcome some of these limitations. One promising opportunity is to adapt genetic cell barcoding techniques in which millions of individual cells can be optically distinguished from each other using diverse molecular labels (barcodes) [246]. These barcodes can be expressed as unique nucleic acid sequences [247], or alternatively as diverse combinations of protein variants [248]. In both cases, barcodes can be detected as color codes via sequential optical imaging, enabling neurons to “self-annotate” their identities in optical microscopy images, in contrast to the single-channel grayscale stains used in electron microscopy. If labeling methods can be engineered such that barcode molecules fill the entire cell (including difficult-to-label compartments such as thin axons and distant synaptic terminals [249]), or alternatively if barcode molecules can be universally targeted to synapses [250], barcode information could potentially be used for error correction in connectomic reconstructions, significantly reducing costs [251]. In combination with ongoing advances in segmentation [252] and enhanced human proofreading tools [147], cell barcoding could reduce or even eliminate human proofreading as a cost bottleneck, addressing what is currently the most critical cost driver in connectomics.

Additional technical challenges must be overcome before cell-filling barcodes can be used for connectomics. Because the diffraction limit of light microscopy is too large to resolve critical nanoscale features like thin axons and dense synaptic connectivity, advanced sample preparation methods are needed to optically detect cell barcodes at high spatial resolution. By embedding whole brains in hydrogels and expanding the tissue, it is possible to overcome the diffraction limit of light microscopy and increase the effective imaging resolution [253]. Tissue expansion methods can now detect nanoscale morphological information [254] suitable for dense reconstructions, and can also detect diverse molecular labels multiplexed across multiple imaging cycles [255, 256]. A combination of these methods would yield image datasets suitable for dense connectomic reconstruction, with additional diverse information channels encoding cell identities available for error correction.

In addition to possibly removing proofreading as the biggest cost bottleneck, optical microscopy has the advantage of the microscopes themselves being significantly lower cost than electron microscopes [257]. According to a recent cost estimate, if protein cell barcoding can eliminate proofreading as the cost bottleneck, a mouse connectome might be acquired for a marginal cost of only $7M assuming an initial capital outlay of $10M, achieving approximately a 1000x cost improvement compared to the state of the art [258]. Further improvements may be possible: this estimate assumes each pixel is imaged ~100 times, or ~50 imaging cycles to read out a 100-bit barcode address space. Custom microscope designs implementing additional spectrally distinct laser lines and cameras could reduce the number of imaging cycles required. Additionally, spatially multiplexing barcode information throughout a cell via reporter islands [259] would permit exponential rather than linear readout. Together, a 125-bit address space could be read out in only 3 imaging cycles using 5 spectrally distinct laser lines and cameras, reducing the marginal cost of connectome image acquisition in this estimate by another ~20-fold. If improvements in image segmentation efficiency [245] can keep pace to manage the cost of volume reconstruction, and storage costs can be managed by on-line data processing, the marginal cost of an optical connectome could fall below $1M per cubic centimeter.
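
The difference between linear and exponential readout can be illustrated with a small calculation. The sketch below is one plausible reading of the figures above, not the cost model of [258]: it assumes that linear readout recovers a fixed number of barcode bits per imaging cycle (2 bits per cycle is inferred here from 100 bits in ~50 cycles), and that with reporter islands each bit position is identified by a sequence of colors across cycles, so that the number of addressable positions grows as colors raised to the number of cycles. The function names are hypothetical.

```python
import math

def cycles_linear(n_bits, bits_per_cycle):
    """Imaging cycles needed when each cycle reads a fixed number of bits."""
    return math.ceil(n_bits / bits_per_cycle)

def cycles_combinatorial(n_addresses, n_colors):
    """Smallest number of cycles c such that n_colors ** c >= n_addresses."""
    cycles, capacity = 0, 1
    while capacity < n_addresses:
        capacity *= n_colors
        cycles += 1
    return cycles

# Assumption: 2 bits per cycle, inferred from "100-bit ... ~50 imaging cycles".
linear_cycles = cycles_linear(n_bits=100, bits_per_cycle=2)

# 5 spectrally distinct channels and a 125-position address space, as in the text.
island_cycles = cycles_combinatorial(n_addresses=125, n_colors=5)

print(f"linear readout: {linear_cycles} cycles")
print(f"reporter-island readout: {island_cycles} cycles")
print(f"reduction in cycles: ~{linear_cycles / island_cycles:.0f}x")
```

Under this reading, 5 colors over 3 cycles give 5^3 = 125 distinguishable positions, and the drop from 50 to 3 cycles is of the same order as the ~20-fold cost reduction quoted above.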

Can these approaches scale to human connectomes? It remains to be seen whether optical connectomics augmented with cell barcodes can eliminate proofreading as the bottleneck and achieve low costs. In addition, other emerging connectomics technologies, including x-ray microscopy and non-microscopic, sequencing-based approaches [260, 261], may yield even better performance characteristics and lower costs. Thus, a low-cost, high-quality human-scale connectome could be within reach on a much shorter timeline (<10 years) and at a much lower cost (<$1B) than previously predicted [232].

It is important to note that most connectomic data currently provides information about cellular-level connections but not necessarily weights or other properties of these connections, which, at least in some cases, appear to be more important for brain dynamics and computations than connections themselves [262]. Although EM data (e.g., information about synapse size) can be used to predict synaptic weights [263], and synaptic properties like kinetics and short-term plasticity of postsynaptic potentials are somewhat constrained by pre- and post-synaptic cell types [264], these relations exhibit substantial variability and have not yet been established for a vast majority of brain cell types (although optical microscopy approaches are beginning to reveal highly stereotyped patterns of synaptic connections [265] with epitope tagging). In addition, capturing rules of long-term plasticity and learning, as well as the effects of neuromodulators, is likely to be crucial for accurate whole-brain models. Lastly, non-neuronal cells like glia are typically not included in the definition of a connectome, but may be necessary for some cognitive functions [266]. Substantial challenges remain in understanding these phenomena and their diversity across cell types and brain areas. There is potential synergy with emerging optical approaches discussed above, as molecular information can be detected alongside barcode information in the same assay [267].

Measuring the electrical and biochemical properties of single neurons

Armed with a connectome, we still face the problem of reconstructing the state-dependent input-output function of each neuron. This requires additional information to be collected alongside or predicted from imaging data to provide parameters for the electrical and biochemical behavior of single neurons.

Using ex vivo tissue, methods like Patch-seq, which combine single-cell electrophysiology, morphology, and transcriptomics, link the molecularly defined cell types to their morpho-electric properties [268, 269, 270, 271, 272, 273]. Additionally, live recording techniques such as calcium imaging and large-scale electrophysiology capture dynamic, state-dependent neural activity that provides crucial information about how neurons behave in vivo [139, 274, 275, 276, 277, 278]. While it is not directly possible to establish causality from observational data, [154] hypothesize that large-scale causal perturbation experiments could be used to reverse engineer the C. elegans nervous system. Connectomes are highly informative here, as they provide a crucial constraint on the nodes that must be simultaneously stimulated and observed [218, 233, 279]. Functional data can be collected before generating the connectome from the same animal [240]. At least in animals with a high amount of stereotypy, average connectomes may sufficiently constrain the problem [151, 152, 280].

Optical approaches enable additional imaging-based data collection, including in-situ transcriptomic characterization [255], connecting such spatial transcriptomics with functional in vivo activity of individual neurons [281, 282], pan-protein labeling [283], or specific protein labeling to infer cell types. Recent advancements have also demonstrated the potential to infer cell type directly from electron microscopy data [263, 284, 285], leveraging the paired morphology and transcriptomic data obtained from Patch-seq experiments.

It is worth noting that these methods do not account for extra-synaptic signaling, such as dense-core-vesicle-dependent signaling that results in different signal propagation than predicted based on the synaptic connectivity [286]. However, molecularly annotated optical connectomes could help account for this by subcellular receptor localization. While extra-synaptic signaling has been a bottleneck in C. elegans, it is unknown to what degree extra-synaptic signaling will be problematic in larger animals.

Biophysical modeling of single neurons and populations of neurons

With connectome data and information about neuronal input-output functions, we can begin to construct biophysically detailed models. These models range from simplified network representations to highly detailed biophysical simulations. The great computational unknown of this approach is what kind of model (and thus what data) is needed to accurately represent various brain functions. Can we use reduced point-neuron models like leaky integrate-and-fire, or do we require biophysically realistic models for every cell type? Is cell type a close enough abstraction, or do we also require protein and RNA abundance (or more detailed data like protein localization) in every individual cell? Recent examples include:

Data-driven biophysical models (approximated mesoscale connectome). [287] use experimental data to infer biophysical parameters, generating biophysically detailed models of diverse cortical cell types from patch-clamp recordings and morphological reconstructions. This includes detailed ion channel dynamics and dendritic computations, but is computationally intensive. [288] and [289] simulated networks of ~50,000 and ~30,000 such detailed neuronal models, respectively, to study dynamics and computations in cortical circuits. It takes ~500 CPU core-hours to simulate one second of biological time with these models (30-50k neurons). It is worth noting that there are theoretically proven methods that could lead to a ~100x speedup compared to standard serial NEURON on CPUs in simulating biologically realistic models [290].

Data-driven point neuron models (approximated connectome). [149] perform a whole-fly-brain simulation using leaky integrate-and-fire neurons whose connection patterns mirror the fly connectome. This model can recapitulate feeding and grooming behaviors that are well-studied in flies. The point neuron approach balances biological realism and computational efficiency.

Data-driven other models (without biophysical dynamics). [151] use connectome data to define an artificial neural network (ANN) model of the fly visual system, optimizing parameters to perform specific tasks in the tradition of task-driven neural networks. This allows the recapitulation of single-cell function, but lacks detailed biophysical realism. [233] directly fit input-output relationships of neural circuits from experimental recordings, without explicitly modeling underlying biophysical mechanisms.

Hybrid approaches. [291] integrate predicted connectivity with simplified dynamics for some components and detailed biophysics for others. For example, corticospinal and corticostriatal cell model morphologies had 706 and 325 compartments, respectively, whereas excitatory and inhibitory neurons had 6 and 3 compartments (soma, axon, dendrite). Their approach took ~96 core-hours of high-performance computing time to simulate one second of biological time. [288] combined ~50,000 biophysically detailed neuron models with point-neuron models in a 230,000-neuron network model of mouse V1 and also developed a fully point-neuron version of this network, which produced results that were consistent with the biophysical simulation.

LFP models. [291, 292, 293] used point-neuron and biophysically detailed network models of cortical circuits to simulate not only neural activity, but also biophysical signals such as the Local Field Potential (LFP), which is commonly used in medicine and bioengineering (e.g., for neuroprosthetics).
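
To make the point-neuron end of this spectrum concrete, the following is a minimal sketch of a leaky integrate-and-fire network whose wiring is given by a connectivity matrix, in the spirit of connectome-constrained point-neuron simulations. It is not the model of [149] or of any other cited work: the three-neuron weight matrix, the dimensionless units, the forward-Euler update, and all parameter values are hypothetical choices made for illustration.

```python
def simulate_lif(weights, input_current, steps=500, dt=0.1,
                 tau=10.0, v_thresh=1.0, v_reset=0.0):
    """Simulate a leaky integrate-and-fire network with forward Euler steps.

    weights[i][j] is the (hypothetical) strength of the connection from
    neuron j onto neuron i; a spike of j at one step adds weights[i][j]
    to the membrane potential of i at the next step.
    Returns a list of per-step lists of booleans (True = spike).
    """
    n = len(weights)
    v = [v_reset] * n
    prev_fired = [False] * n
    raster = []
    for _ in range(steps):
        fired = []
        for i in range(n):
            # Synaptic input from neurons that spiked on the previous step.
            synaptic = sum(w for w, f in zip(weights[i], prev_fired) if f)
            # Leak toward a resting potential of 0, driven by the input current.
            v[i] += (input_current[i] - v[i]) * (dt / tau) + synaptic
            spiked = v[i] >= v_thresh
            if spiked:
                v[i] = v_reset
            fired.append(spiked)
        raster.append(fired)
        prev_fired = fired
    return raster

# Toy "connectome": neuron 0 drives neuron 1; neuron 2 is unconnected.
toy_weights = [
    [0.0, 0.0, 0.0],
    [1.2, 0.0, 0.0],
    [0.0, 0.0, 0.0],
]
raster = simulate_lif(toy_weights, input_current=[1.5, 0.0, 0.0])
spike_counts = [sum(step[i] for step in raster) for i in range(3)]
print("spike counts per neuron:", spike_counts)
```

In this toy network, neuron 1 fires one step after each spike of neuron 0 and neuron 2 stays silent, so the activity pattern is determined by the wiring alone. Replacing the toy matrix with measured connectivity is the step that requires connectomic data, and the open question raised above is whether dynamics this simple are adequate for a given brain function.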

Evaluation

Largely the same considerations apply to biophysically detailed models as to embodied digital twins in terms of reproducing in vivo neural physiology recordings, mimicking behavior, and identifying desirable long-term features of whole-brain modeling. Biophysically detailed models additionally require that we correctly capture circuit and biophysical mechanisms (at a certain level of resolution). This is a difficult task, but it comes with the benefit of constraining the huge space of solutions that approximate activity and behavior to those implemented in the brain’s "hardware". As such, these models represent a challenging but perhaps most direct path to creating a human-like AI implemented according to its biological substrate.

While connectomics reveals details of the synaptic architecture of the brain that are crucial for accurate whole-brain models, many challenges remain with respect to other properties that determine circuit function. These include the electrical and biochemical properties of neurons and synapses, the effects of neuromodulators, and, perhaps most importantly, plasticity and learning rules. Current technology requires extensive experimental mapping of such properties for each of the thousands of brain cell types, if not millions to billions of individual cells. Nevertheless, accelerating technological progress suggests that it might be possible to circumvent these problems by establishing statistical links between electrical and biochemical properties and structural information from connectomic data. If breakthroughs in experimental techniques and machine learning tools facilitate sufficient progress in this area, the field would advance substantially and whole-brain models could become feasible.

Opportunities

There are critical opportunities to understand the technical and conceptual questions in brain connectomics and biophysics, connecting the two via biophysically detailed models. Additional opportunities can be found in [294].

- With the smallest possible organism, collect all possible data (proteomics, transcriptomics, connectomics, in vivo neurophysiology, behavior, other cell types, etc.) and carry out extensive modeling to investigate the precision of simulating circuit function from its structure and understanding what data might be missing (or, conversely, unnecessary) for accurate models. Some proposals to do this include [154, 295].

- Leverage emerging light-microscopy tools to deliver connectomics datasets of whole brains that can be obtained faster and cheaper and are less challenging for computational reconstruction. With these connectomes, rapidly test less computationally intensive point neuron models and scale to biophysically realistic models.

- Scale up scanning technology and associated computational/AI tools to the level of whole mammalian brains - first the mouse and later monkey and human.

- Develop software foundations that can integrate biophysically detailed models with modern neural networks in a modular fashion.

- Combine broad profiling of biophysical properties of brain cells and connections across cell types and brain areas with AI techniques to generate accurate models for every cell and connection recorded in the structural connectomic datasets.

- Investigate neuromodulation, metabolism, and plasticity mechanisms and incorporate them in biophysically detailed models.

- Leverage comparative neuroanatomy to identify the simplest organisms that have homologous deep-brain structures to mammals, so that biophysically realistic simulations can be informed by top-down approaches based on evolutionary neuropsychology.

- Improve the performance and power consumption of brain simulations to enable affordable and scalable biophysically detailed models.